AI@Home, Part 5: Adding Websearch

The last piece to describe: how to combine large language models with web content. Similar to using local documents for Retrieval Augmented Generation (RAG), it is also possible to use content coming from a web search. If you have ever used perplexity.ai, you have seen this already. Personally, I love Perplexity. To a good extent, it has already replaced Google for my daily web searches. It not only searches the web but also combines the information it finds and helps to answer very specific questions based on the results of a web query.

The simplified process is more or less as follows:

- The user asks a question.

- From that, the LLM generates one or more query terms for a web search.

- One or more searches are triggered.

- For each result link, the content of the referenced page is retrieved.

- All the retrieved content is now the basis for Retrieval Augmented Generation. It serves as the context from which the LLM generates an answer.
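The steps above can be sketched in a few lines of Python. This is only an illustration of the flow, not how Perplexity or Open WebUI actually implement it. The function `answer_with_web_search` and its four callables (`generate_queries`, `search`, `fetch`, `generate_answer`) are hypothetical names; you would plug in your own LLM and search backend.

```python
from typing import Callable, Dict, List


def answer_with_web_search(
    question: str,
    generate_queries: Callable[[str], List[str]],
    search: Callable[[str], List[str]],
    fetch: Callable[[str], str],
    generate_answer: Callable[[str, str], str],
    max_pages: int = 5,
) -> str:
    """Run the flow: question -> queries -> searches -> pages -> answer."""
    # Step 2: the LLM turns the question into one or more search queries.
    queries = generate_queries(question)

    # Step 3: run every query and collect the result links, dropping
    # duplicates while keeping the order in which they were found.
    urls: List[str] = []
    for query in queries:
        for url in search(query):
            if url not in urls:
                urls.append(url)

    # Step 4: retrieve the content behind each link (capped at max_pages).
    pages: Dict[str, str] = {url: fetch(url) for url in urls[:max_pages]}

    # Step 5: the retrieved text becomes the context for the LLM.
    context = "\n\n".join(f"Source: {url}\n{text}" for url, text in pages.items())
    return generate_answer(question, context)
```

Passing the individual steps in as functions keeps the sketch independent of any particular model or search engine and makes it easy to try out with simple stand-ins.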

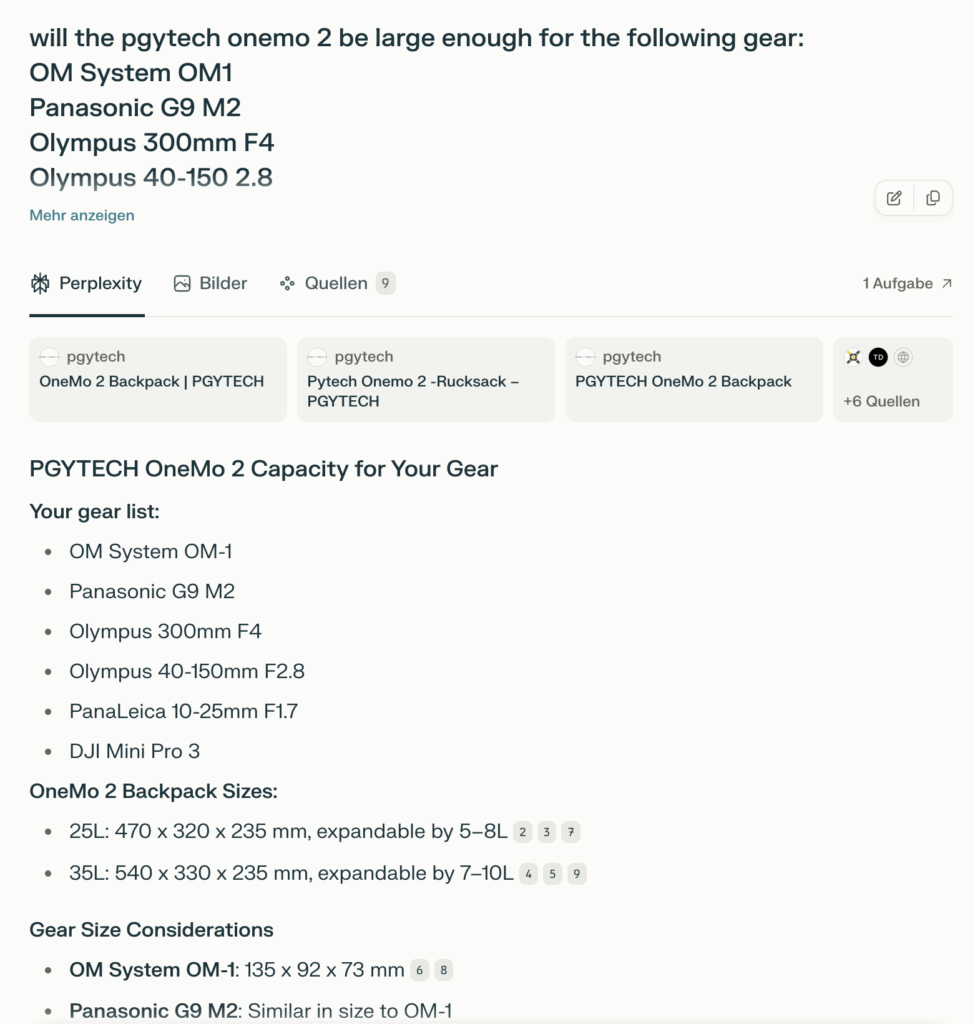

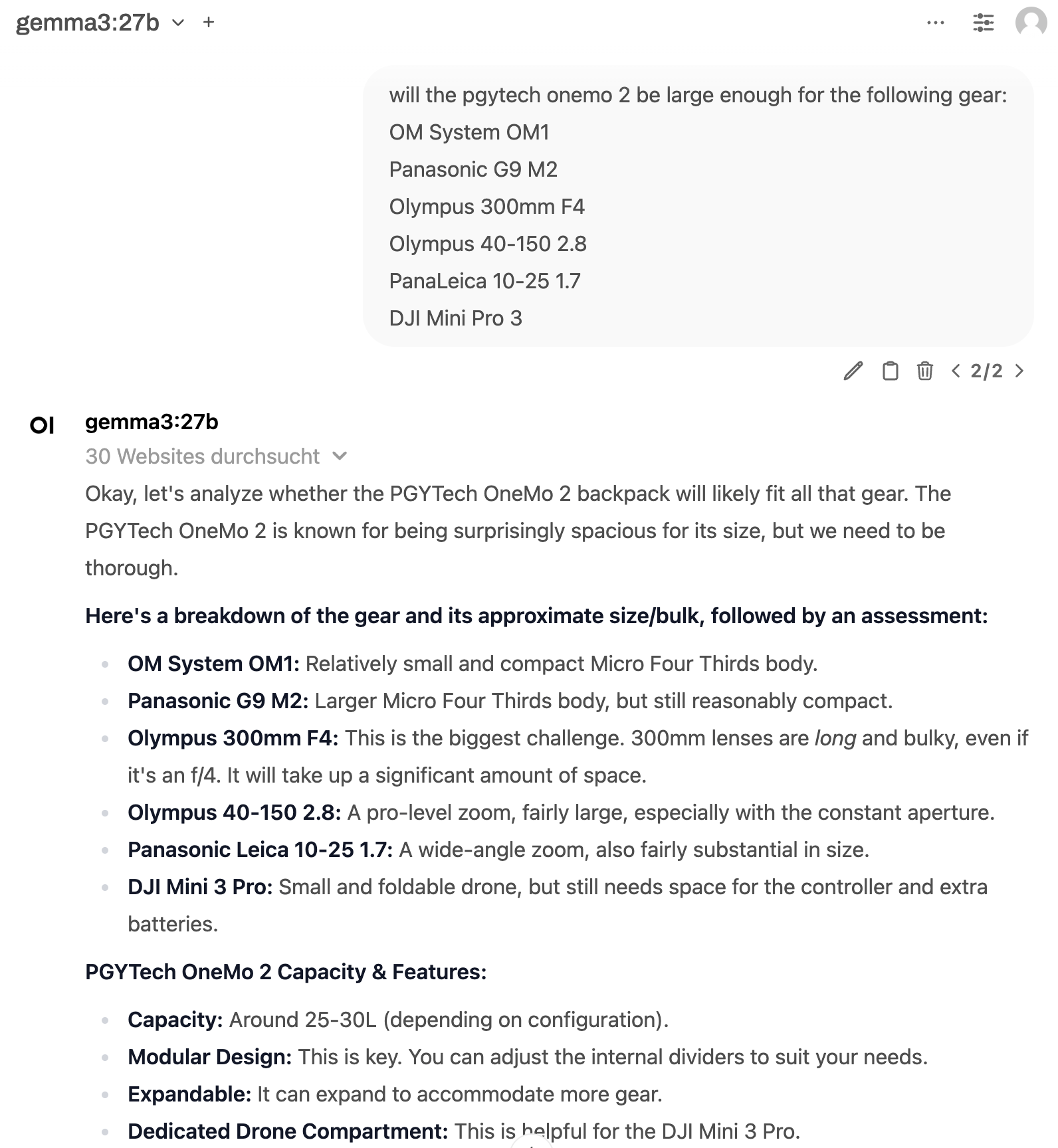

With this approach, it is possible to tackle quite complex tasks. For example: I was looking for a backpack to hold my photo gear for a planned trip to Namibia. The backpack should be large enough to carry two camera bodies, a couple of lenses, and a drone. From here, you have two options: either you describe your gear and ask the system for a recommendation, or you ask whether a specific backpack would fit the gear you specified. I tried both approaches; both work. For the first approach, it is really fascinating to observe how Perplexity literally goes over the specified gear, collects all dimensions, sums everything up, and checks this against the specifications of the backpack.

In principle, this is also possible locally, admittedly not with the quality and speed of Perplexity, but still not bad; compare the two images above.

Open WebUI is again the glue between the language models and the context data from the web searches. The configuration is done in the administration settings of Open WebUI. There are several search engine options. Most, if not all, require some kind of service subscription or at least an API key. The exception is SearXNG. What is SearXNG?

SearXNG is a free and open-source internet metasearch engine that aggregates search results from over 70 different search services and databases, including popular engines like Google, Bing, DuckDuckGo and more. It is a fork of the original Searx project, designed to enhance privacy by not tracking or profiling its users and by removing private data from search requests.

Key features of SearXNG include:

- Federated architecture: It is hosted on multiple public and private instances, allowing users to either use public servers or run their own private instance locally or on a server.

- Categorical searching: Results are organized into categories such as Web, Images, Videos, News, Social Media, Music, Files, IT, and Science, providing more structured search results.

- Privacy-focused: It does not store user information or search histories, ensuring anonymity and privacy. It can also be used over Tor for added anonymity.

- Open source and customizable: Users can host their own instance, modify the code, and contribute to the project, supporting digital freedom and decentralization.

As a professed self-hoster, I went for exactly the last bullet point and host SearXNG on my Unraid server. Whether on Unraid or not, it is very simple. With plain Docker, it is explained very well here; on Unraid, it is available through the Unraid Community Applications store.

Configuration is straightforward. I just changed the port number to avoid collisions with other services.

Once SearXNG is installed and up and running, as a “side effect” it can be used as your very own private metasearch engine.

But next to this obvious use case, it can also be configured to return results in JSON, so that Open WebUI is able to work with the result of a given query. This needs to be enabled in the SearXNG settings.yml file. On Unraid, this file is located under /mnt/user/appdata/searxng/. In this file, there may or may not be a section called formats. Here you need to make sure that json is listed as well, so it should look like this:

    # remove format to deny access, use lower case.
    # formats: [html, csv, json, rss]
    formats:
      - json
      - html

That is all, but of course there are a ton of other options to configure, such as the search engines (Google, Bing, DuckDuckGo, ...) used in the background.
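To check that the JSON format is really enabled, you can query SearXNG yourself. The following is a minimal sketch using only the Python standard library. It relies on the `format=json` parameter of the SearXNG search endpoint and on the `results` list in the response, whose entries carry `title`, `url` and `content`. The helper names and the host and port in the usage note are my assumptions; adjust them to your own instance.

```python
import json
from typing import Dict, List
from urllib.parse import urlencode
from urllib.request import urlopen


def build_search_url(base_url: str, query: str) -> str:
    """Build a SearXNG search URL that asks for JSON output."""
    params = urlencode({"q": query, "format": "json"})
    return f"{base_url.rstrip('/')}/search?{params}"


def parse_results(payload: str, limit: int = 5) -> List[Dict[str, str]]:
    """Reduce the JSON response to title, URL and snippet of the top hits."""
    data = json.loads(payload)
    hits = []
    for item in data.get("results", [])[:limit]:
        hits.append(
            {
                "title": item.get("title", ""),
                "url": item.get("url", ""),
                "snippet": item.get("content", ""),
            }
        )
    return hits


def search(base_url: str, query: str, limit: int = 5) -> List[Dict[str, str]]:
    """Send the query to a running SearXNG instance and parse the answer."""
    with urlopen(build_search_url(base_url, query), timeout=10) as response:
        return parse_results(response.read().decode("utf-8"), limit)
```

Calling `search("http://localhost:8888", "photo backpack drone")` (hypothetical host and port) should return a list of hits. If json is missing from formats, expect the request to be refused with an HTTP error instead.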

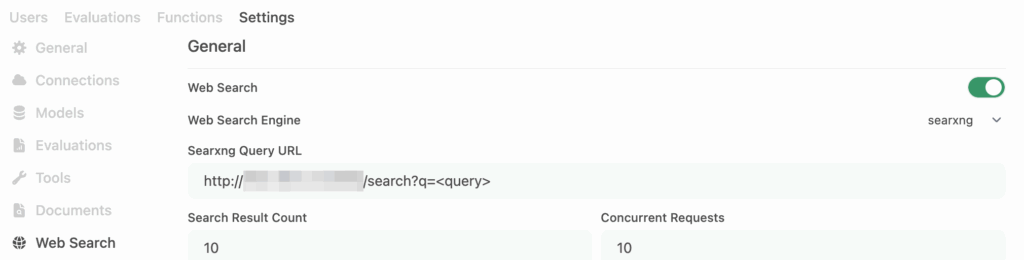

In Open WebUI, the search is configured in the admin panel; again, this is straightforward. Just make sure to configure the host correctly, as well as the way the search term is passed to SearXNG. My configuration looks as follows:

After that, Open WebUI will show a Web Search button, which triggers a SearXNG search with the search terms generated by the LLM. The result pages are then crawled and used as context for the LLM to answer the given prompt.
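To give an idea of what "used as context" means, here is a rough sketch of that last step: strip the crawled HTML down to its visible text and place it in front of the question. This is not Open WebUI's actual code; the names `html_to_text` and `build_prompt`, the prompt wording and the `max_chars` limit are assumptions made purely for illustration.

```python
from html.parser import HTMLParser
from typing import List, Tuple


class _TextExtractor(HTMLParser):
    """Collect visible text, skipping the content of <script> and <style>."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def html_to_text(html: str) -> str:
    """Return the visible text of an HTML page as a single string."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


def build_prompt(question: str, pages: List[Tuple[str, str]], max_chars: int = 2000) -> str:
    """Combine numbered page texts and the question into one prompt."""
    parts = []
    for index, (url, html) in enumerate(pages, start=1):
        # Truncate each page so a few long pages do not exhaust the context.
        text = html_to_text(html)[:max_chars]
        parts.append(f"[{index}] {url}\n{text}")
    context = "\n\n".join(parts)
    return (
        "Answer the question using only the sources below.\n\n"
        f"{context}\n\nQuestion: {question}"
    )
```

Capping each page at a fixed number of characters is the crudest possible way to stay within the context window of a local model; a real setup would more likely split the text into chunks and pick the most relevant ones.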

Voila – poor man’s perplexity.ai