AI@Home, Part 6: Connecting Obsidian (or other Applications) to your local AI

Running AI locally also means you can connect whatever you want without worrying about additional costs. So, in addition to my Photo Tagging Job, which runs once a week and creates tags and titles for my photos in Immich, I have also connected my note-taking program, Obsidian.

Obsidian is a powerful, free note-taking application that stores your notes locally as plain text Markdown files, organized in a folder called a “vault”. Unlike many cloud-based note apps, Obsidian keeps your data on your device, giving you full control and privacy, and making your notes easily accessible and future-proof. If you ever stop using Obsidian, your notes remain as standard text files. Obsidian is highly flexible and customizable, supporting plugins, themes, and advanced workflows. It is available on all major platforms (Windows, macOS, Linux, iOS, Android), and can be used for everything from simple note-taking to managing complex projects, writing, or even publishing content online.

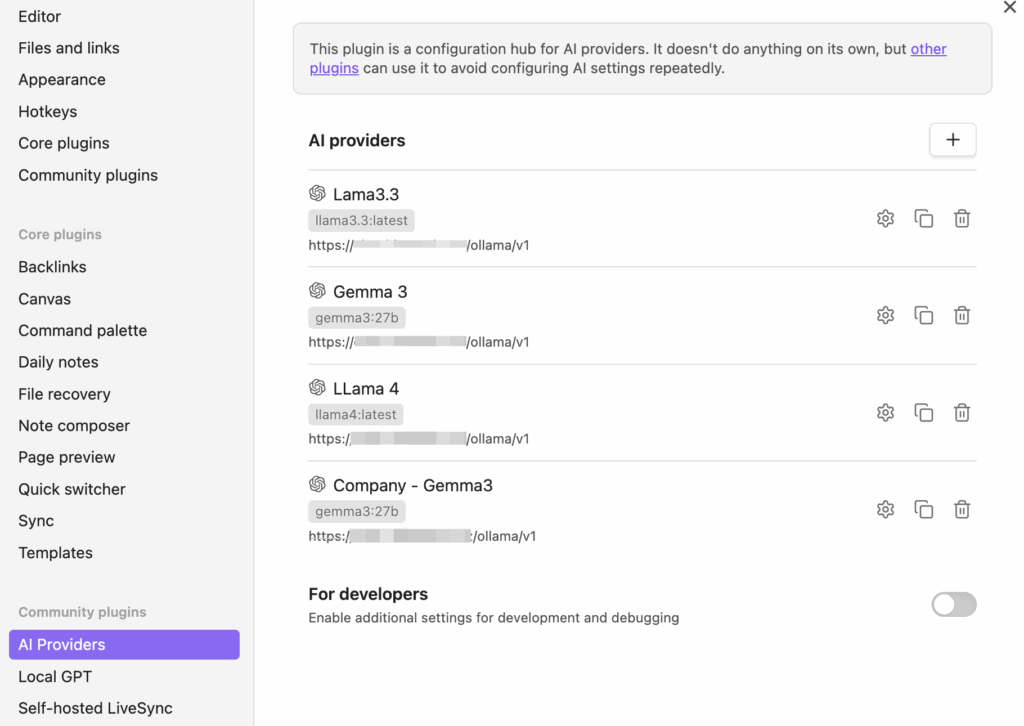

Speaking of plugins, I use two of them to connect my local AI: AI Providers and Local GPT. They work in combination.

The AI Providers plugin acts as a configuration hub for connecting Obsidian to various AI backends. It supports local models via Ollama, as well as OpenAI models and OpenAI-compatible services. The OpenAI-compatible option is the one I have configured.

The Local GPT plugin then uses an AI provider to enable AI-assisted actions on your notes. It features context menu actions such as summarizing, continuing writing, and fixing grammar on selected text. You can also add custom actions, each defined by its own prompt.

AI Providers configuration

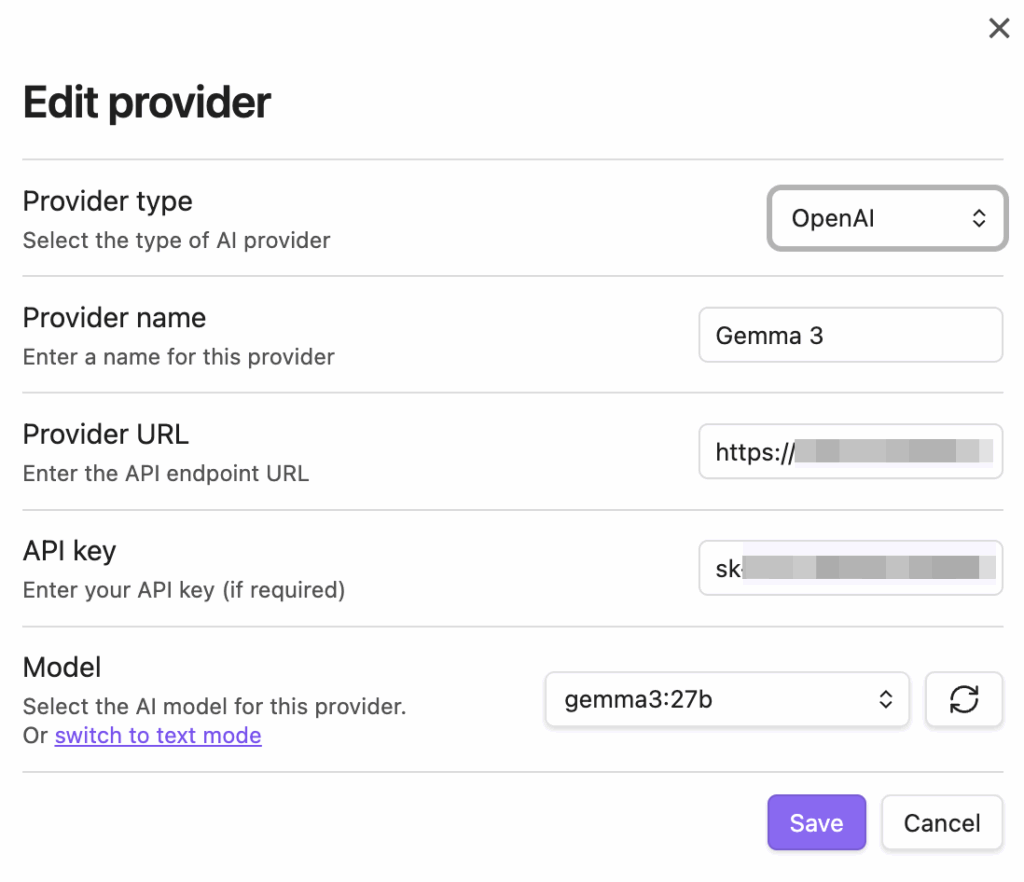

Let’s look at the configuration of AI Providers. As you can see, I have configured a couple of AI providers. Basically, for every model I want to use, one provider is configured. The provider configuration itself looks as follows:

Note the provider type. Because I run Open WebUI in front of Ollama, and Ollama offers OpenAI-compatible endpoints, I can use my local models as a drop-in replacement for ChatGPT. The API key comes from Open WebUI, where one can be generated under Account Settings.

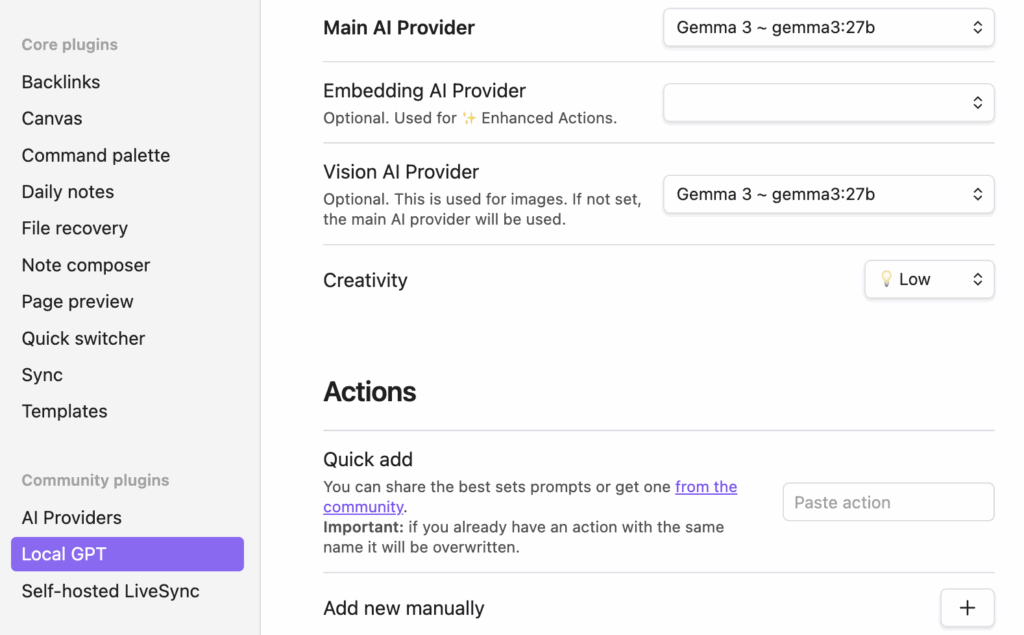
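To make "OpenAI-compatible" a bit more concrete, here is a minimal Python sketch of the kind of request such a provider ends up sending. It is not taken from the plugin's source. The base URL, the model name, and the key are placeholders I made up for illustration, and the exact path depends on your setup (as far as I know, Open WebUI serves this under `/api`, while Ollama itself uses `/v1`). The function only builds the request, so you can inspect it without a running server.

```python
import json
import urllib.request


def build_chat_request(base_url, api_key, model, user_text):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,  # one provider per model, so the model name is fixed per provider
        "messages": [{"role": "user", "content": user_text}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            # The key generated in Open WebUI goes in as a bearer token.
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values (assumptions): adjust host, port, key, and model to your setup.
request = build_chat_request(
    "http://localhost:3000/api", "sk-your-key", "llama3", "Say hello"
)
print(request.full_url)
```

Passing the request to `urllib.request.urlopen` would send it. With an OpenAI-style response, the answer text would normally be found under `choices[0].message.content` in the returned JSON.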

Local GPT configuration

This configuration is pretty straightforward: it comes down to selecting the provider. All configured providers are available, but only one is in use at a time.

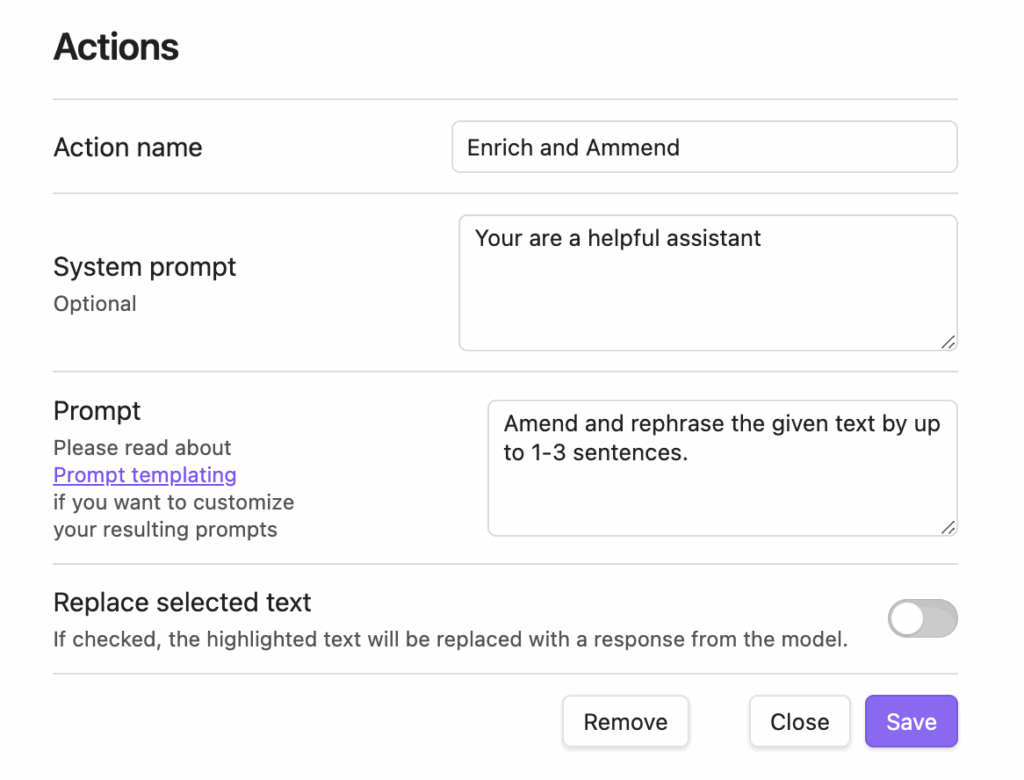

More interesting is the configuration of the actions. The predefined actions are General Help, Continue Writing, Summarize, Fix Spelling and Grammar, and Find Action Items. What distinguishes them is their prompts. If none of the actions matches what you need, you can add your own. Again, this comes down to specifying the missing action with a suitable prompt.

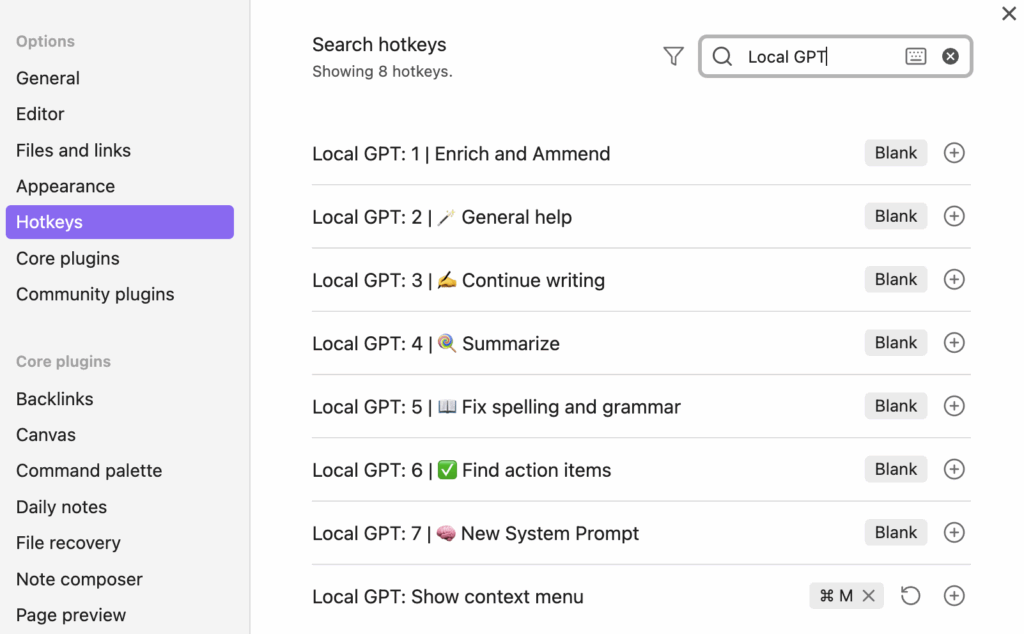
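Conceptually, an action is little more than a name plus a prompt that gets combined with the selected text. The following Python sketch illustrates that idea. It is my own simplification and not how Local GPT is implemented internally. The `Action` class, the `build_messages` helper, and the "Translate to German" action are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Action:
    """A hypothetical stand-in for an action: a name and its prompt."""
    name: str
    system_prompt: str


def build_messages(action, selected_text):
    """Combine the action's prompt with the selected text into chat messages."""
    return [
        {"role": "system", "content": action.system_prompt},
        {"role": "user", "content": selected_text},
    ]


# A made-up custom action, defined purely by its prompt.
translate = Action(
    name="Translate to German",
    system_prompt="Translate the following text to German. Return only the translation.",
)

messages = build_messages(translate, "Good morning")
print(messages)
```

Seen this way, adding a new action means writing one more prompt. The selected text and the provider stay the same.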

The last thing to do is assign the actions to a hotkey/shortcut so they can be accessed. This is done in the Obsidian settings under Hotkeys. Filter for “Local GPT” and define some hotkeys for the actions. I just configured the Context Menu, so when I select some text, I can access all actions with the Local GPT context menu, which displays all the configured actions.

Once everything is configured, this is how it looks in action:

You will see the corrected text above 😉